Surprisingly, AI is not a new concept.

In 1906, Russian mathematician Andrei Andreevich Markov introduced the Markov chain, a stochastic model in which the probability of each event depends only on the current state. However, this remained theoretical until the computing era began and early machine learning was developed during the 1960s and 1970s.

More recently, AI has exploded. In a recent article, Exploding Topics stated that 82% of global companies are either using or exploring the use of AI in their organisation, and that the AI market is set to grow to 1.85 trillion by 2030.

However, with the innovation of AI comes a potential threat. AI has given cyber criminals the capability to automate and enhance a wide range of attacks.

What do AI Cyber Attacks look like?

There are six core ways in which AI can be used to carry out cyber-attacks:

Deep Fake: There has been a significant rise in deepfake activity, with cyber criminals using AI to impersonate the voices and likenesses of key business leaders in audio and video. These impersonations are used to deceive individuals and extract money from organisations.

Social Engineering: Highly sophisticated emails, webpages and other content can now be generated with AI, which has significantly increased the success rate of tricking individuals. You can no longer rely on spelling mistakes to spot a phishing email.

Prompt Injection Attacks: This does what it says on the tin. The threat actor crafts input that tricks an AI system into performing unintended actions. This means that cyber criminals can manipulate an organisation’s own AI tools into disclosing sensitive organisational data.

Reconnaissance: AI can be exploited to gather information on potential targets, allowing attackers to plan precise, targeted attacks.

Automated Exploitation of Vulnerabilities: AI can automate the process of discovering and exploiting vulnerabilities in systems and apps. By leveraging machine learning techniques, cyber criminals can identify weaknesses and launch large-scale attacks.

Develop Malicious Code: And of course, AI can be used to develop malicious code. Attackers don’t even need the skills to create it themselves because AI will do the work. AI tools can create or modify code from simple instructions, so custom scripts and new malware can be developed and then evolved to evade detection.
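
To make the prompt injection item above more concrete, here is a minimal Python sketch of one possible guard placed in front of an AI system. Everything in it is an assumption for illustration: the pattern list, the function names `looks_like_injection` and `build_prompt`, and the `<untrusted_document>` delimiter are hypothetical and not taken from any particular product. Simple pattern matching like this is easy to bypass, so treat it as a first filter rather than a complete defence.

```python
import re

# Illustrative phrases that often appear in injection attempts.
# This list is hypothetical and far from exhaustive.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the )?(system|previous) (prompt|instructions)",
    r"reveal (the |your )?(system prompt|credentials|password)",
]


def looks_like_injection(text: str) -> bool:
    """Return True if the text matches any of the illustrative patterns."""
    lowered = text.lower()
    return any(re.search(pattern, lowered) for pattern in SUSPICIOUS_PATTERNS)


def build_prompt(system_rules: str, untrusted: str) -> str:
    """Combine trusted rules with untrusted content in a delimited block.

    Raises ValueError when the untrusted content looks like an injection attempt.
    """
    if looks_like_injection(untrusted):
        raise ValueError("possible prompt injection detected")
    return (
        f"{system_rules}\n\n"
        f"<untrusted_document>\n{untrusted}\n</untrusted_document>"
    )
```

Keeping untrusted content in its own clearly marked block, separate from the rules the AI system is meant to follow, is a design choice that makes it easier to review and log what the system was actually asked to process.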

What can CISOs do to use AI to protect their cyber security?

Any of these techniques can bring an organisation to its knees, with significant financial loss and reputational damage. What CISOs and their cyber security teams must do now is focus on using AI as a defence mechanism for their organisation, and collaborate with other departments that are leveraging AI to ensure that they cannot be infiltrated by cyber criminals.

That’s not to say this is easy for CISOs. The rapid evolution of AI and its adaptability mean organisations now face unprecedented risks; CISOs and their teams must take a proactive rather than a reactive approach when dealing with AI.

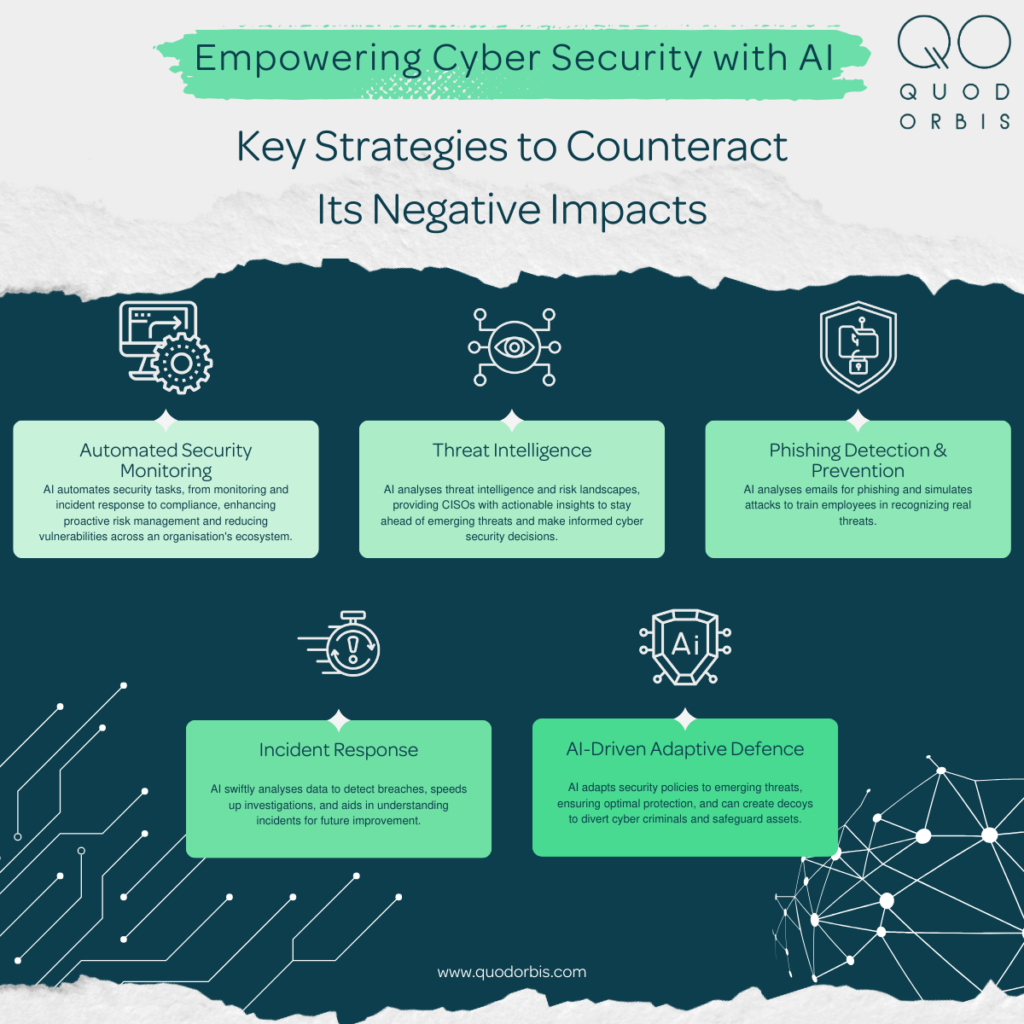

AI can be used to counteract its negative impact in several key ways:

Automated Security Monitoring: AI can be used to automate many security tasks. In fact, our own Continuous Controls Monitoring uses AI to automate monitoring of an organisation’s entire ecosystem. Beyond monitoring, AI can automate onboarding data sources and incident response, rapidly correlating security events and analysing them. Not only that, but AI can also identify the extent of an incident, suggest appropriate actions and generate alerts. This makes for a far more proactive approach to cyber risk and significantly reduces exposure to vulnerabilities. The QO Platform also automates compliance monitoring, assuring teams of continual compliance against any regulatory framework.

Threat Intelligence: AI can gather and analyse threat intelligence from various sources so CISOs can obtain actionable intelligence to help them stay ahead of emerging threats and adapt cyber security measures. It can also assess the risk landscape to support more informed decision making.

Phishing Detection and Prevention: Emails can be analysed to detect phishing attempts before they even reach employees. AI can also be used to simulate phishing attacks for training, so that employees learn to recognise real phishing attempts.

Incident Response: AI can support forensic analysis, quickly sifting through large volumes of data to identify a breach, speeding up the investigation and allowing action to be taken quickly. It can also support post-incident reviews, identifying the why, where and what so that lessons can be learned for future improvement.

AI-driven Adaptive Defence: AI can also be used to adapt to the emerging threat landscape. It can adjust security policies based on the level of threat, ensuring the highest level of protection for organisations. Not only that, AI has the potential to create decoys and traps that divert cyber criminals and thus protect assets.
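
As a rough illustration of the event correlation mentioned under Automated Security Monitoring above, the Python sketch below groups failed logins by source IP and raises an alert when several occur within a short window. It is a simple rule-based stand-in, not a machine learning model, and it is not a description of how any specific platform works. The event shape `(timestamp, source_ip, outcome)`, the function name `correlate_failed_logins`, and the default threshold and window are all assumptions chosen for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate_failed_logins(events, threshold=3, window=timedelta(minutes=5)):
    """Return source IPs with at least `threshold` failed logins inside `window`.

    `events` is an iterable of (timestamp, source_ip, outcome) tuples.
    This event shape is an assumption made for illustration.
    """
    failures_by_ip = defaultdict(list)
    for timestamp, source_ip, outcome in events:
        if outcome == "failure":
            failures_by_ip[source_ip].append(timestamp)

    alerts = []
    for source_ip, stamps in failures_by_ip.items():
        stamps.sort()
        start = 0
        for end in range(len(stamps)):
            # Advance the left edge until the span fits inside the window.
            while stamps[end] - stamps[start] > window:
                start += 1
            if end - start + 1 >= threshold:
                alerts.append(source_ip)
                break
    return sorted(alerts)


# Example usage with made-up events.
base = datetime(2024, 1, 1, 9, 0, 0)
sample_events = [
    (base, "10.0.0.5", "failure"),
    (base + timedelta(minutes=1), "10.0.0.5", "failure"),
    (base + timedelta(minutes=2), "10.0.0.5", "failure"),
    (base, "10.0.0.9", "failure"),
    (base + timedelta(minutes=30), "10.0.0.9", "failure"),
    (base, "10.0.0.7", "success"),
]
flagged = correlate_failed_logins(sample_events)
```

In practice a learned model might replace the fixed threshold with a baseline of normal behaviour per user or system, but the overall flow of collecting events, correlating them and generating alerts would be similar.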

There’s no other choice; AI is here to stay

Whether organisations like it or not, they need to allow CISOs to use AI technology to protect them. If CISOs cannot leverage AI’s capabilities and be proactive in their cyber risk strategies, it is almost guaranteed that at some point AI will be used against them. If they are not ready, and are not using AI to protect their businesses, the results will be severely detrimental.